Akash

Decentralized Cloud

Akash is an on-chain auction marketplace for off-chain container deployments. It competes with current cloud providers (eg. AWS and Google Cloud) by giving datacenter operators the tools to rent out idle capacity, which gives users a cheaper alternative.

For users, the interface will be similar to any other cloud compute platform: users define their deployment criteria (resources and price).

For providers, the onboarding process involves installing the Akash agent (based on CoreOS) on idle servers and programmatically bidding on new deployments.

Token metrics

tbd

Market opportunity/Competitive analysis

There are several competitors in this space at varying stages of progress, but most are targeting supercomputer or highly distributed workloads whereas Akash is targeting more common enterprise workloads (eg. running an application server or anything you might deploy onto AWS or Google Cloud).

- Golem: decentralized supercomputer

- Sonm: focusing on more distributed computing (eg. machine learning, cdn/video streaming, video rendering)

- iExec: Ethereum cloud made for DApps and very large parallel applications (HPC, AI, big data, scientific sims, 3d rendering, fog computing)

- Dfinity: decentralized supercomputer

- Hypernet: “tailored for large-scale distributed compute environments”

- 0chain

Akash has published a blog post about its competition.

The only real competitor I know of that is targeting similar workloads is:

- Symbioses: the team has told me that they are targeting not only supercomputer workloads but also general purpose workloads. More info upcoming in another post.

Akash also mentioned that part of their market is technology providers who don’t yet offer hosted/managed services.

Go-to-market strategy: start with CI/CD and testing workloads, since these are usually less critical systems. I think it’s a great way to build a developer community and an easy sell to companies if the integration is simple.

Team/Advisors/Partnerships

(Akash is a project by Overclock Labs)

- Greg Osuri (Founder & CEO)

  - Cofounder & CTO at AngelHack

- Adam Bozanich (Founder & CTO)

  - Previously Cofounder and Tech Lead at Sprouts Tech

  - Holds a US patent for network protocol fuzzing

- Gregory Gopman (Cofounder)

  - CEO of ICO Roadshows

  - Cofounder of AngelHack

  - Bachelor’s in Marketing at Univ. of Florida ‘07

- Aaron Stein (Software Engineer)

  - BS Chem. Eng. at UC Riverside ‘15

- Munjal C. Shah (Business Development)

  - Cofounder at Cross Border Deal Flow

  - BA Chemistry & Psychology at Northwestern University ‘09

- Nick Alesandro (VP of Product)

  - BS Nutritional Science at Cornell ‘91

- Allison Silber (Head of Operations)

Advisors and Investors

- CrunchFund

- Hone

- Auren Hoffman (Former CEO of LiveRamp, Former Cofounder of BrightRoll)

- Steven Fan (Head of Investments, Tencent)

- Charles Songhurst (Former Head of Global Strategy, Microsoft)

- Jaron Lukasiewicz (Former CEO of Coinsetter)

- Brandon Goldman (Former Founder of Freshpay, Former Lead Software Architect at Blockfolio)

Akash has 5 companies in pilot, including Packet, Life360, and Polyverse.

Competitive Advantage

Some of the advisors are founders of companies that have underutilized datacenters, or want to use Akash to lower their costs.

Greg Osuri (CEO) is Cofounder of AngelHack, which means Akash has immediate access to a huge pool of engineering talent.

Akash is mostly compatible with Kubernetes configuration, so migration and onboarding should be simple. That makes it easy for potential tenants to scale their workloads in from existing cloud platforms.

Tokenomics and Token Utility

Medium of exchange: providers and users interact across an auction system, where providers set a minimum price for their resources and users set a maximum price for needed resources.
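As a rough illustration of the price matching described above, here is a minimal Python sketch. It assumes the lowest qualifying bid wins, which this post does not confirm; the `Bid` type and `pick_winner` function are hypothetical names, not part of Akash.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Bid:
    provider: str  # hypothetical provider identifier
    price: int     # price offered, at or above the provider's own minimum


def pick_winner(max_price: int, bids: List[Bid]) -> Optional[Bid]:
    """Return the cheapest bid that does not exceed the tenant's maximum price.

    Assumption: lowest price wins, and ties go to the earliest bid.
    """
    eligible = [b for b in bids if b.price <= max_price]
    if not eligible:
        return None
    return min(eligible, key=lambda b: b.price)


bids = [Bid("dc-a", 12), Bid("dc-b", 9), Bid("dc-c", 15)]
winner = pick_winner(max_price=13, bids=bids)
print(winner)
```

If no provider bids at or below the tenant's maximum, the function returns `None` and the order would simply stay unfilled in this simplified model.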

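Staking: anyone can join the network, so there is a risk of bad actors. Requiring a stake gives participants something to lose if they misbehave.

Roles in the network: Tenant, Datacenter (provider), Validator (DPoS consensus), and Marketplace Facilitator (runs the auction).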

Code

https://github.com/ovrclk/akash

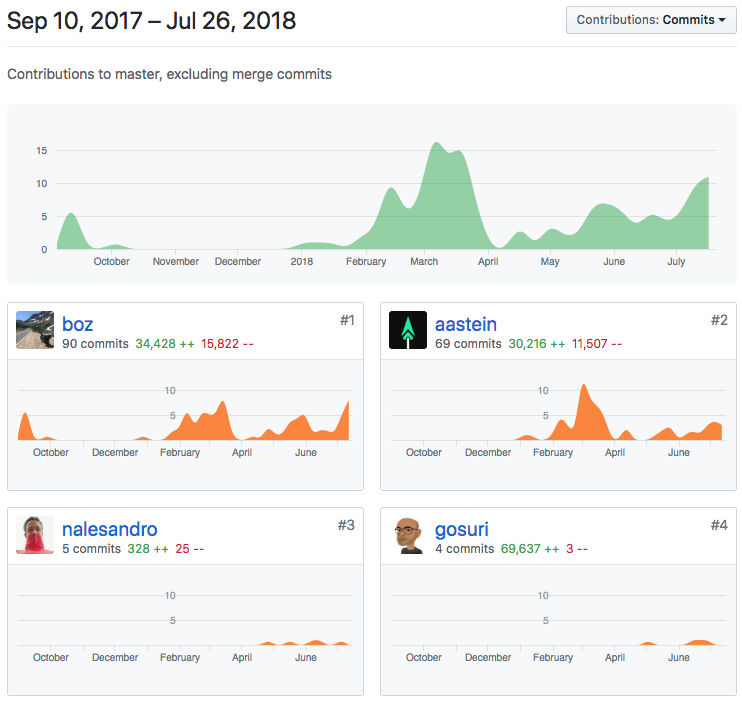

This is good - the CEO, CTO, and main engineer have the most contributions in this git repo, and the project was started September 2017.

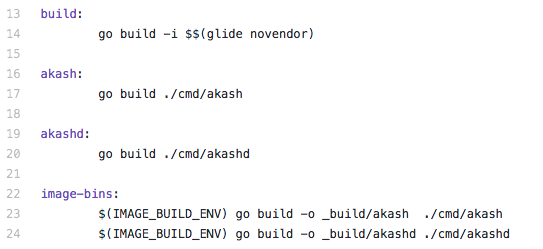

First let’s take a look at the Makefile, to know where to begin.

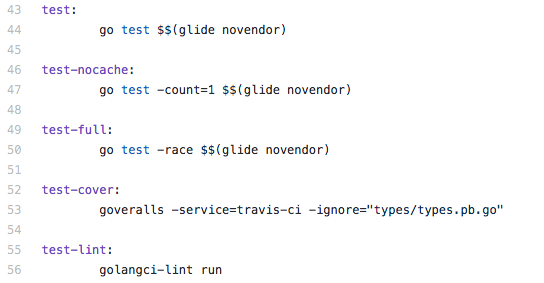

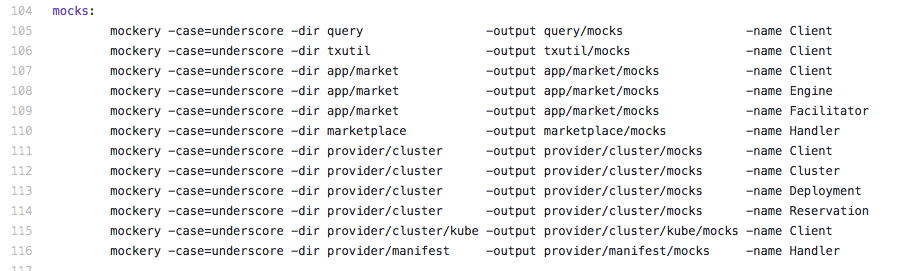

Also, look! They have make commands referring to tests and mocks. Tests and mocks are a great sign the team has engineering experience. The repo has tests and mocks sprinkled throughout.

Nice!

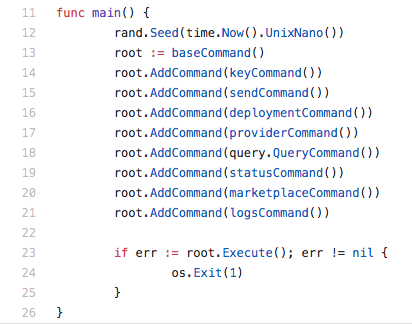

The Makefile refers to /cmd/akash/main.go, which seems to be run by both providers and tenants.

It loads the following files:

/cmd/akash/key.go: manage keys

/cmd/akash/send.go: send tokens as a transaction

/cmd/akash/deployment.go: sends the deployment configuration as a transaction and waits for a Fulfillment and a Lease. Once the Lease is created, the manifest is sent over to the provider. This file also contains functions to update and close deployments.

/cmd/akash/provider.go: creates keys for the provider and uses Kubernetes to run tasks. It also closes Fulfillments and Leases.

/cmd/akash/query/*: contains functions for getting info on account, deployment, fulfillment, lease, order, and provider.

/cmd/akash/status.go: Get latest block height and latest block hash

/cmd/akash/marketplace.go: Listens to events on the marketplace

/cmd/akash/logs.go: Logging utils
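The deployment flow handled by deployment.go can be pictured as a small state machine. The sketch below is in Python for brevity (the repo itself is Go), and the state names and transition table are my own simplification of the steps listed above, not Akash's actual types.

```python
from enum import Enum, auto


class State(Enum):
    SUBMITTED = auto()      # deployment configuration sent as a transaction
    FULFILLED = auto()      # a provider has posted a Fulfillment
    LEASED = auto()         # a Lease has been created
    MANIFEST_SENT = auto()  # manifest delivered to the provider
    CLOSED = auto()         # deployment closed


# Allowed transitions (assumed): any open deployment may be closed.
TRANSITIONS = {
    State.SUBMITTED: {State.FULFILLED, State.CLOSED},
    State.FULFILLED: {State.LEASED, State.CLOSED},
    State.LEASED: {State.MANIFEST_SENT, State.CLOSED},
    State.MANIFEST_SENT: {State.CLOSED},
    State.CLOSED: set(),
}


def advance(current: State, target: State) -> State:
    """Move to `target`, raising ValueError if the table does not allow it."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"cannot go from {current.name} to {target.name}")
    return target


state = State.SUBMITTED
for step in (State.FULFILLED, State.LEASED, State.MANIFEST_SENT):
    state = advance(state, step)
print(state.name)
```

The point of the table is that the manifest only goes out after a Lease exists, which matches the order of operations described for deployment.go.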

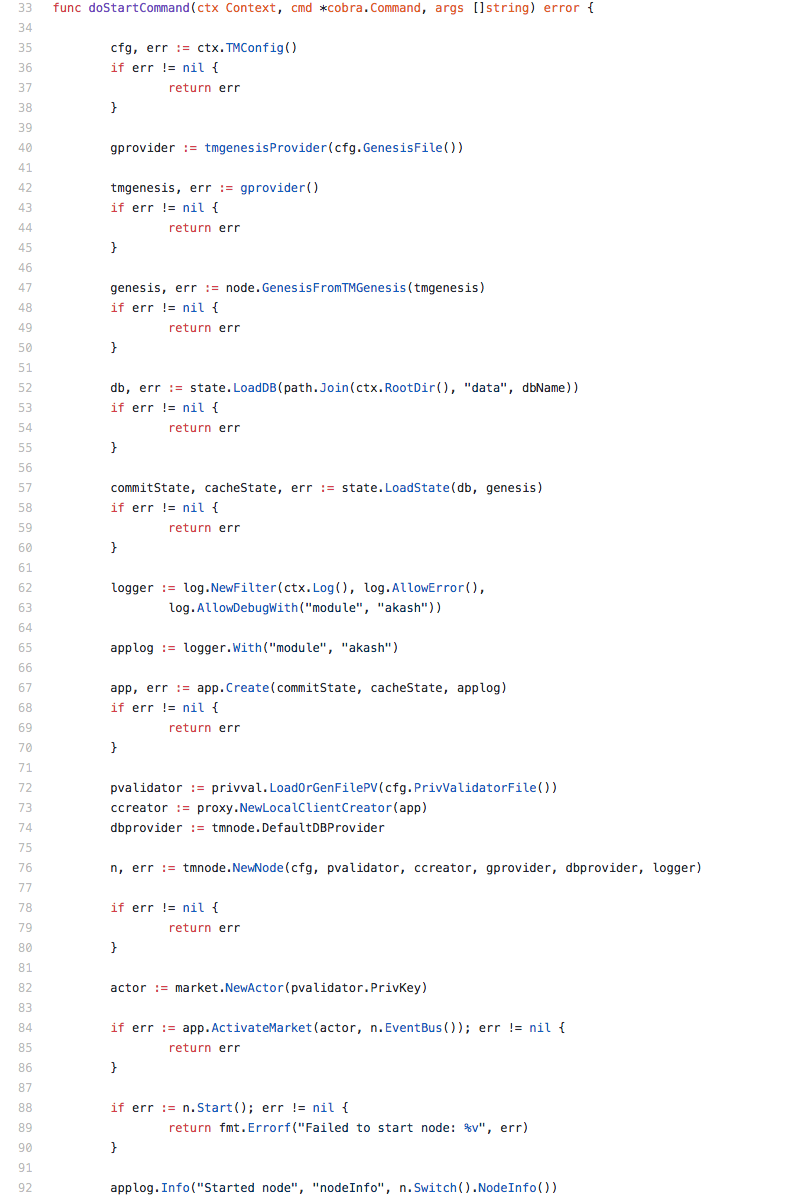

The Makefile also refers to /cmd/akashd/main.go, which seems to be run by validators. The files in this directory initialize the node, using Tendermint:

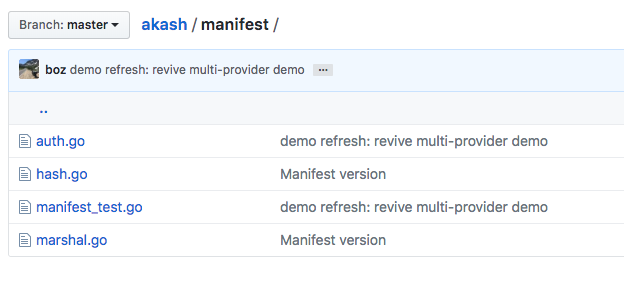

/manifest: signing, verifying, and parsing manifests. Code makes sense.

/marketplace: PubSub subscriber to monitor all transaction events and call individual handlers for each step of a deployment lifecycle. The events come from /provider/event/tx.go.
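The dispatch pattern described for /marketplace can be sketched as follows. This is Python rather than the repo's Go, and the event names and the `Monitor` class are hypothetical, chosen only to mirror the lifecycle steps mentioned in this post.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Handler = Callable[[dict], None]


class Monitor:
    """Routes each transaction event to the handlers registered for its type."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Handler]] = defaultdict(list)

    def on(self, event_type: str, handler: Handler) -> None:
        """Register a handler for one event type."""
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> int:
        """Call every handler for `event_type` and return how many ran."""
        handlers = self._handlers.get(event_type, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)


seen: List[str] = []
monitor = Monitor()
monitor.on("lease_created", lambda tx: seen.append(tx["deployment"]))
monitor.publish("lease_created", {"deployment": "demo"})
monitor.publish("order_created", {"deployment": "demo"})  # no handler registered
print(seen)
```

Keeping one handler per lifecycle step means a provider, a tenant CLI, and a logger can each subscribe to only the events they care about.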

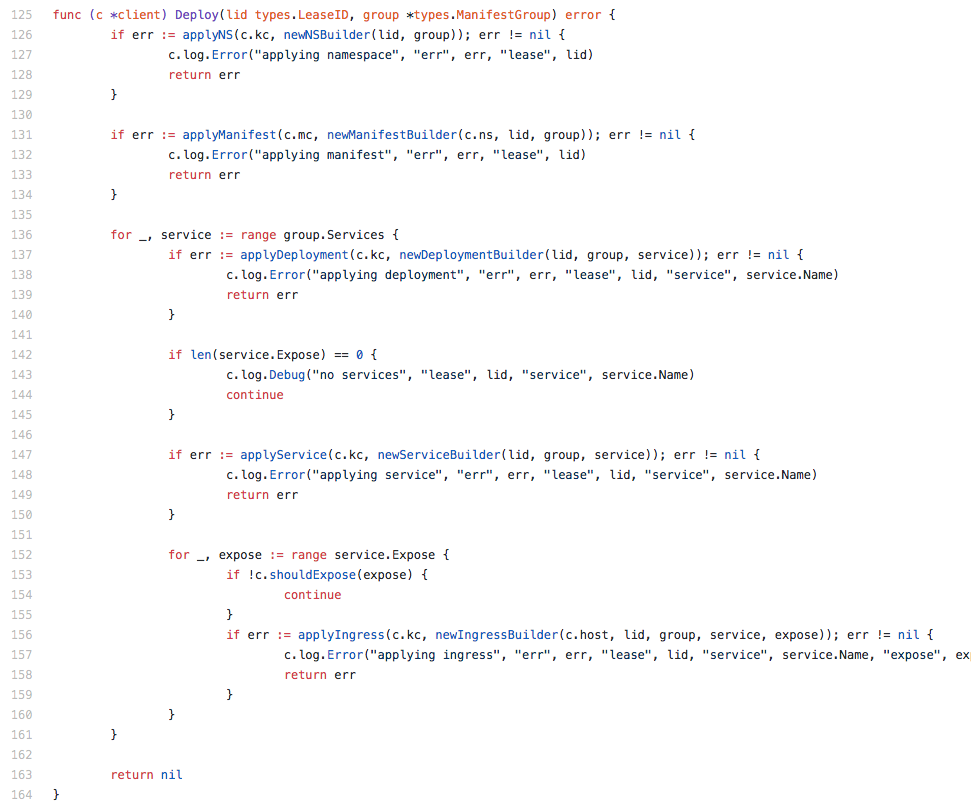

/provider: The code here includes a bid engine, an RPC, and a manifest handler. In addition, this directory contains a key part — the compute cluster management code — in /provider/cluster.
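To make the bid engine's job concrete, here is a hedged sketch of the decision a provider might make for each incoming order: bid only if the requested resources fit and the tenant's maximum price covers the provider's minimum. The field names, units, and the choice to bid exactly at the minimum price are assumptions for illustration. I have not confirmed Akash's actual pricing strategy.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Resources:
    cpu: int        # CPU units (hypothetical unit)
    memory_mb: int  # memory in megabytes


@dataclass(frozen=True)
class Order:
    needs: Resources  # resources the tenant is asking for
    max_price: int    # the most the tenant will pay


def decide_bid(order: Order, free: Resources, min_price: int) -> Optional[int]:
    """Return the price to bid, or None if the provider should sit this one out."""
    fits = order.needs.cpu <= free.cpu and order.needs.memory_mb <= free.memory_mb
    if not fits or order.max_price < min_price:
        return None
    return min_price  # assumption: bid at the provider's floor price


free = Resources(cpu=8, memory_mb=16384)
small = Order(Resources(cpu=2, memory_mb=4096), max_price=10)
huge = Order(Resources(cpu=32, memory_mb=4096), max_price=10)
print(decide_bid(small, free, min_price=7), decide_bid(huge, free, min_price=7))
```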

Lastly, I love that they already have CI/CD and coverage workflow all set up.

Conclusion

Akash’s code is clearly testnet-ready already, and it includes basically everything the whitepaper says it should. It’s a lot simpler than the competitors listed above, but that’s irrelevant because Akash is targeting general purpose workloads instead of supercomputer workloads.

A simplified explanation of what has been created is a blockchain based on Tendermint, with a protocol for broadcasting and negotiating computing resources, managed by a Kubernetes backend.

However, there is one thing I wish Akash had: the ability to execute general purpose tasks on GPUs. I think this is something Symbioses has developed already, which gives them a larger pool of data centers to draw on (ie all the existing GPU mining facilities).

Other Info

Questions for the team:

How to verify outcome of computation?

- Kubernetes logging, most likely. For a majority of jobs, this will need to be handled on the application side by the tenant (eg. error codes, 400/500, latency logging).

What does the Manifest file for deployment look like?

- Similar to Kubernetes config files and Dockerfiles

When is the public version available?

- “Next few days” as of 07/25/2018

Is there benchmarking done for each agent re: latency, cpu speed, memory, storage?

- Benchmarking is a bit difficult because it can be easy for people to fake. There are a few options that we’re exploring, but the current plan is to encourage people to deploy a healthcheck system as part of their deployment.
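A tenant-side healthcheck of the kind suggested in these answers could be as small as the sketch below. It classifies a response as unhealthy on a 4xx/5xx status code or on high latency. The 500 ms threshold and the function name are my own assumptions, not something the team specified.

```python
def is_healthy(status_code: int, latency_ms: float, max_latency_ms: float = 500.0) -> bool:
    """Treat 4xx/5xx responses or slow responses as unhealthy.

    The default latency threshold is an arbitrary, assumed value.
    """
    if status_code >= 400:
        return False
    return latency_ms <= max_latency_ms


# (status code, latency in ms) samples a tenant might collect from its own app
samples = [(200, 120.0), (500, 80.0), (200, 900.0)]
results = [is_healthy(code, ms) for code, ms in samples]
print(results)
```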

How is storage dealt with? eg. for machine learning workloads that need a ton of data from my local machine, can the network gain access directly, or do I need to upload to IPFS first?

- Built-in block storage is currently for “ephemeral” disk space offered by the providers. Outbound networking is not restricted at the moment, so you are free to fetch data from S3 or IPFS if necessary.

Do GPU workloads work yet, or are they planned to be supported? I know Google Cloud’s Kubernetes supported GPUs with their custom addon, but I wasn’t sure if open source Kubernetes supports them.

- Planned! Docker now supports it, which means Kubernetes can as well.

Do you have a testnet demo that can be shared before the public version is available?
